Written by: Alfredo Privitello

Would you like to suggest ideas to your favorite company and, hopefully, see them implemented? And you, managers, would you like your online community to provide you with new ideas? What about you, students, would you like to know more about Crowdsourcing? If your answer is “yes”, keep reading this e-paper. Its purpose is to give an overview of Crowdsourcing and its current examples by answering a set of questions (“What is Crowdsourcing?”, “What are the main examples of Crowdsourcing?”, “Is it worth implementing Crowdsourcing?”) with the help of the literature.

“What is Crowdsourcing?”

Crowdsourcing is a topic attracting significant attention. However, international journals have published on Crowdsourcing only in recent years (Bayus, 2013; Afuah & Tucci, 2012; Poetz & Schreier, 2012; Stieger et al., 2012; Schweitzer et al., 2012). With regard to the origin of the word, Howe (2006) labeled and defined the Crowdsourcing concept. Specifically, Crowdsourcing is the process of obtaining needed content, services or ideas by asking for contributions from an online community, instead of involving solely typical suppliers or employees (Howe, 2006). Since Crowdsourcing is an active research topic, the literature contains numerous definitions, and the list keeps growing. For example, Estellés-Arolas and González-Ladrón-de-Guevara (2012) collected at least 40 definitions of Crowdsourcing.

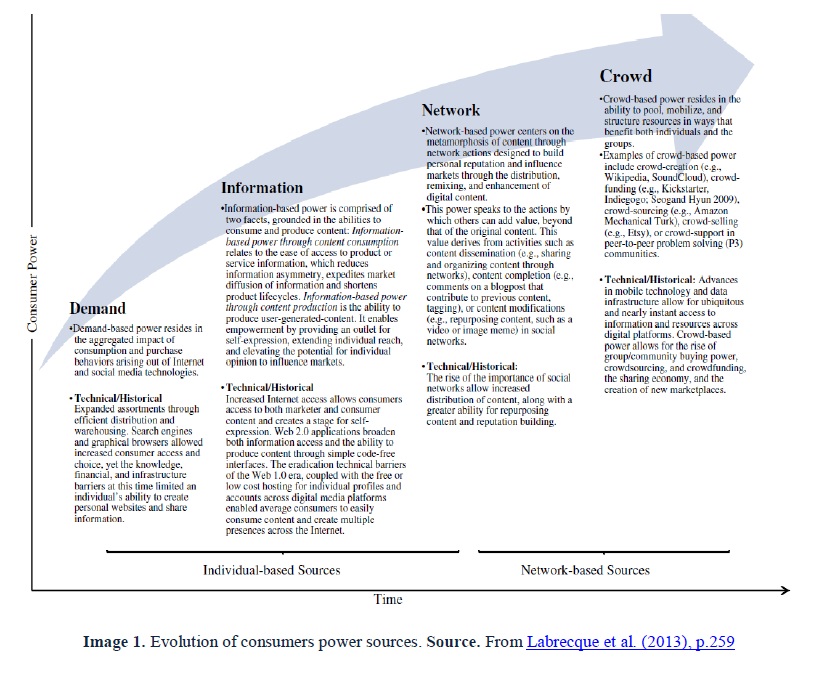

With regard to companies, several studies underline how both small and large firms are increasingly collaborating with external sources for idea generation (Dahlander & Wallin, 2006; Chesbrough, 2003). For example, organizations such as Dell, Procter & Gamble, Microsoft, Starbucks and Lego are already using Crowdsourcing to get new ideas from customers. On Crowdsourcing platforms, idea selection depends on various factors, such as comments, votes, points earned, the number of ideas, feasibility of implementation and consistency with companies’ strategies (Martínez-Torres, 2012; Di Gangi, Wasko & Hooker, 2010; Westerski et al., 2013). Current literature presents two general ways to solicit ideas on Crowdsourcing platforms (Hossain & Zahidul Islam, 2015). The first is the “idea contest”, in which external people are asked to suggest ideas within a certain time frame (Hossain & Zahidul Islam, 2015). Once ideas have been submitted, the best ones are selected and awarded. The second takes the form of “continuous interactions” between companies and crowds. Here crowds are not required to have high expertise, and they can propose ideas based simply on their experience with the companies’ products (Hossain & Zahidul Islam, 2015). For instance, Dell allows users to submit ideas based on their personal usage experience. Generally, in Crowdsourcing, active crowds are identified, rewarded and further stimulated in idea generation. As a consequence, companies can increase their innovation success rate and their revenue.

Regarding the positioning of Crowdsourcing in the literature (Figure 1), Labrecque et al. (2013) found that this new tool represents one of the latest evolutions of consumers’ empowerment and, specifically, of crowd-based power sources. With this last term, the authors refer to “the ability to pool, mobilize and structure resources in ways that benefit both the individuals and the groups” (Labrecque et al., 2013, p.264). Finally, they underline how Crowdsourcing combines and amplifies the characteristics of all the previous power forms (demand-, information- and network-based power), which are described in their study and summarized in Image 1 (Labrecque et al., 2013).

Figure 1. Crowdsourcing positioning in the literature Source. Author’s diagram

Image 1. Evolution of consumers’ power sources Source. From Labrecque et al. (2013), p.259

“What are the main examples of Crowdsourcing?”

The following section presents the main examples of Crowdsourcing. However, it is important to underline that new Crowdsourcing applications keep emerging. At the time of writing, the main examples are the following:

- “Open Source Software”: this example of Crowdsourcing has been a blessing to many people. It allows crowds to develop new or alternative software that provides an accessible, high-quality solution to all of us. Essentially, in “Open Source Software”, the source code of the program is public and can be used for free. Examples of this application of Crowdsourcing are Mozilla Firefox, OpenOffice, Ubuntu and Thunderbird (Albors et al., 2008). In terms of financial resources, “Open Source Software” projects usually run thanks to small contributions from crowds (Huberman et al., 2009). In terms of quality, “Open Source Platforms” receive continual evaluation, input and assessment from crowds. As a consequence, in many cases, “Open Source Platforms” can perform better than commercial products (Wexler, 2011). Finally, companies are also starting to support open source initiatives to increase revenues and boost brand image.

Image 2. Example of “Open Source Software” Source. From Ubuntu (2016)

- “Microtasking”: this example of Crowdsourcing is defined in the literature as a system in which crowds can choose and complete microtasks for non-monetary or monetary rewards (Kittur et al., 2008). One example of a “Microtasking” platform is Amazon’s Mechanical Turk, which started in 2005. Specifically, the platform coordinates the demand for and the supply of tasks requiring human intelligence and contributions from crowds. “Microtasking” platforms are interesting because they allow engagement within crowds/communities at little monetary cost and with a fairly fast response time. Generally, the reward for the people completing microtasks is around one dollar each. Moreover, the company seeking crowds’ contributions can refuse to pay if the quality of the work is poor. In fact, managing the quality of the users’ contributions is crucial in “Microtasking” (Kern et al., 2009). Interestingly, the majority of users completing microtasks are women between 20 and 40 years old (Paolacci et al., 2010; Mason & Suri, 2012). Finally, a system called “txteagle” allows numerous people to earn money by completing simple microtasks such as surveys, transcriptions and translations, even from their mobile devices (Eagle, 2009).

Image 3. Example of “Microtasking” Source. From Amazon’s Mechanical Turk (2016)

- “Idea Generation”: this example of Crowdsourcing mainly takes the form of idea competitions. Essentially, companies ask crowds to submit ideas and select the best ones. Besides organizations, several intermediaries have begun to solicit ideas from online crowds in support of companies. The efficacy of “Idea Generation” through Crowdsourcing has also been demonstrated by Poetz and Schreier (2012). According to these authors, crowds can perform better than professionals in several aspects of “Idea Generation” (Poetz & Schreier, 2012). In fact, the collective effort of numerous people is frequently better than the work of a few experts in “Idea Generation” (Surowiecki, 2005). A certain number of companies (e.g. IBM, Procter & Gamble, Unilever, Starbucks and Dell) have already used Crowdsourcing to find new ideas for their products (Lutz, 2011). Moreover, besides idea competitions, these big companies have also used mobs to get new ideas collectively. Thus, it is possible to state that “Idea Generation” from crowds already represents a good alternative for companies (Poetz & Schreier, 2012).

Image 4. Example of “Idea Generation” Source. From My Starbucks Idea (2013)

- “Citizen Science”: this example of Crowdsourcing is a type of collaborative research which solicits crowds to solve real problems in our world (Wiggins & Crowston, 2011). Essentially, “Citizen Science” relies on people (e.g. scientists) who voluntarily gather and process data to solve certain problems. These people are mostly motivated to join collaborative research by their curiosity, sense of responsibility and personal interests (Rotman et al., 2012). “Citizen Science” projects can cover various disciplines including geology, medicine, environment and ecology (Silvertown, 2009). These projects are typically organized on virtual platforms (e.g. Scientific American) and seem to be able to produce reliable innovations and solutions. Finally, “Citizen Science” can be applied to everyday world problems as well as to more complicated scientific work (Swan et al., 2010).

Image 5. Example of “Citizen Science” Source. From Scientific American (2016)

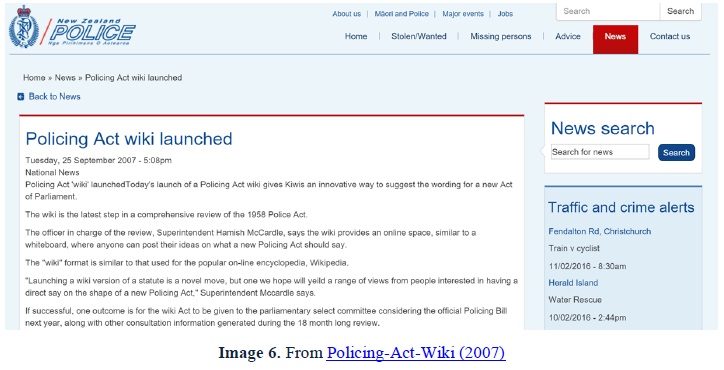

- “Public Participation”: this example of Crowdsourcing is similar to “Citizen Science”, but it is considered more suitable for crowds willing to participate in public projects (Hilgers & Ihl, 2010; Brabham, 2009). An example of “Public Participation” is “Policing-Act-Wiki”, which was launched by New Zealand Police. Essentially, this form of Crowdsourcing allows voters to contribute to the political dialogue and helps to develop different types of “Public Participation” (Bugs et al., 2010). Moreover, Crowdsourcing in the form of “Public Participation” makes it possible to engage numerous people and promotes an open dialogue between decision makers and citizens (Bugs et al., 2010). Thus, “Public Participation” can bring benefits to various activities (Adams, 2011).

Image 6. Example of “Public Participation” Source. From Policing-Act-Wiki (2007)

- “Citizen Journalism”: this example of Crowdsourcing represents an alternative to typical Internet journalism (Muthukumaraswamy, 2010). Fundamentally, “Citizen Journalism” (e.g. Littera Report) is based on donations from crowds/readers to reporters. These donations create a solid relationship between crowds and journalists, as well as a certain sense of responsibility among the reporters (Aitamurto, 2011). Thanks to “Citizen Journalism”, online crowds/readers can rely on “genuine” information which, overall, is also of acceptable quality (Goodchild & Glennon, 2010). Finally, anyone can typically contribute to “Citizen Journalism”.

Image 7. Example of “Citizen Journalism” Source. From Littera Report (2016)

- “Wikis”: this example of Crowdsourcing involves websites allowing users to collaborate with each other, as well as to edit, add, remove and link other resources or pages (Albors et al., 2008). A well-known example of Crowdsourcing in the form of “Wikis” is Wikipedia (Doan et al., 2011). Another example is Geo-Wiki, where a global network of people works to improve the quality of world maps (Fritz et al., 2009). Moreover, “Wikis” are also used in large firms, non-profit organizations, state bodies and research institutes.

Image 8. Example of “Wikis” Source. From Geo-Wiki (2016)

In conclusion, “is it worth implementing Crowdsourcing?”

The integration of online communities into innovation processes is fundamental for firms (Enkel, Gassmann & Chesbrough, 2009). Moreover, recent studies demonstrate that crowds’ ideas are often more innovative and beneficial for customers than companies’ in-house ideas (Poetz & Schreier, 2012). Thus, it is possible to state that Crowdsourcing should, at least, accompany the internal idea generation process of a company (Poetz & Schreier, 2012). On the other hand, it is also important to underline that Crowdsourcing presents the following challenges:

- Creating a suitable team for idea evaluation and implementation (Martínez-Torres, 2012);

- The idea evaluation process can be complicated when crowds generate numerous ideas in a short period (Jouret, 2009);

- Individuals with great familiarity with a problem may lack creativity (Franke, Poetz & Schreier, 2013; Wiley, 1998);

- Managers tend to avoid Crowdsourcing because they are unsure about the appropriateness of the ideas submitted by crowds (Boudreau & Lakhani, 2013);

- Finding the best way to identify and support active users in submitting good ideas (Kristensson & Magnusson, 2010);

- In general, how to effectively conduct the process of idea generation from crowds is still a central issue for both practitioners and researchers (Schulze & Hoegl, 2008; Rohrbeck, Steinhoff & Perder, 2010).

This paper has fulfilled its purpose of exploring Crowdsourcing and its current examples (see Figure 2 below) by answering the above set of questions with the help of the literature. However, Crowdsourcing is a recent tool for obtaining ideas, content or services by asking for contributions from an online community, and its applications and literature are still evolving. The main limitation of this project is a lack of “depth” in investigating Crowdsourcing, which is due to the word count limit. At the same time, this paper offers readers a good overview of the phenomenon. Thus, it can be used by both BrandBa’s users and the general public to get an overall understanding of Crowdsourcing, and to gain insights for further studies. Specifically, the above list of challenges has been proposed to motivate students and researchers to further investigate the fascinating topic of Crowdsourcing.

Figure 2. Main examples of Crowdsourcing Source. Author’s diagram

Reference list

Adams, S. A. (2011). Sourcing the crowd for health services improvement: The reflexive patient and “share-your-experience” websites, Social Science & Medicine, vol. 72, no. 7, pp. 1069-1076. Link:http://www.sciencedirect.com.ludwig.lub.lu.se/science/article/pii/S027795361100075X [Accessed 15 February 2016]

Afuah, A. & Tucci, C. L. (2012). Crowdsourcing as a solution to distant search, Academy of Management Review, vol. 37, no.3, pp. 355-375. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=76609307&site=eds-live&scope=site [Accessed 15 February 2016]

Aitamurto, T. (2011). The impact of crowdfunding on journalism: Case study of Spot.Us, a platform for community-funded reporting, Journalism Practice, vol. 5, no. 4, pp. 429-445. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=cms&AN=62668014&site=eds-live&scope=site [Accessed 15 February 2016]

Albors, J., Ramos, J. C. & Hervas, J. L. (2008). New learning network paradigms: Communities of objectives, Crowdsourcing, wikis and open source, International Journal of Information Management, vol. 28, no. 3, pp. 194-202. Link:http://www.sciencedirect.com.ludwig.lub.lu.se/science/article/pii/S0268401207001181 [Accessed 15 February 2016]

Amazon’s Mechanical Turk (2016). Amazon’s Mechanical Turk - Mechanical Turk is a market place for work, February 2016, Available Online: https://www.mturk.com/mturk/welcome [Accessed 12 February 2016].

Bayus, B. L. (2013). Crowdsourcing new product ideas over time: An analysis of the Dell Ideastorm community, Management Science, vol. 59, no. 1, pp. 226-244. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edswsc&AN=000313889500014&site=eds-live&scope=site [Accessed 15 February 2016]

Boudreau, K. J. & Lakhani, K. R. (2013). Using the crowd as an innovation partner, Harvard Business Review, vol. 91, no. 4, pp. 60-69. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com.ludwig.lub.lu.se/login.aspx?direct=true&db=bth&AN=86173288&site=eds-live&scope=site [Accessed 15 February 2016]

Brabham, D. C. (2009). Crowdsourcing the public participation process for planning projects, Planning Theory, vol. 8, no. 3, pp. 242-262. Link:http://plt.sagepub.com.ludwig.lub.lu.se/content/8/3/242 [Accessed 15 February 2016]

Bugs, G., Granell, C., Fonts, O., Huerta, J., & Painho, M. (2010). An assessment of public participation GIS and Web 2.0 technologies in urban planning practice in Canela, Brazil, Cities, vol. 27, no. 3, pp. 172-181. Link:http://www.sciencedirect.com.ludwig.lub.lu.se/science/article/pii/S0264275109001358 [Accessed 15 February 2016]

Chesbrough, H. W. (2003). Open Innovation: The new imperative for creating and profiting from technology, Harvard Business Press, Cambridge, MA. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=cat01310a&AN=lovisa.001646763&site=eds-live&scope=site [Accessed 15 February 2016]

Dahlander, L. & Wallin, M. W. (2006). A man on the inside: Unlocking communities as complementary assets, Research Policy, vol. 35, no. 8, pp. 1243-1259. Link:http://www.sciencedirect.com.ludwig.lub.lu.se/science/article/pii/S0048733306001387 [Accessed 15 February 2016]

Di Gangi, P. M., Wasko, M. & Hooker, R. (2010). Getting customers’ ideas to work for you: learning from Dell how to succeed with online user innovation communities, MIS Quarterly Executive, vol. 9, no. 4, pp. 213-228. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=58657253&site=eds-live&scope=site [Accessed 15 February 2016]

Doan, A., Ramakrishnan, R., & Halevy, A. Y. (2011). Crowdsourcing systems on the World-Wide Web, Communications of the ACM, vol. 54, no. 4, pp. 86-96. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=59582584&site=eds-live&scope=site [Accessed 15 February 2016]

Eagle, N. (2009). Txteagle: Mobile Crowdsourcing, in Internationalization, Design and Global Development, IDGD '09, Springer Berlin Heidelberg, pp. 447-456. Link:http://link.springer.com.ludwig.lub.lu.se/chapter/10.1007%2F978-3-642-02767-3_50 [Accessed 15 February 2016]

Enkel, E., Gassmann, O. & Chesbrough, H. (2009). Open R&D and open innovation: Exploring the phenomenon, R&D Management, vol. 39, no. 4, pp. 311-316. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=43538801&site=eds-live&scope=site [Accessed 15 February 2016]

Estellés-Arolas, E. & González-Ladrón-de-Guevara, F. (2012). Towards an integrated Crowdsourcing definition, Journal of Information Science, vol. 38, no. 2, pp. 189-200. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=74193159&site=eds-live&scope=site [Accessed 15 February 2016]

Franke, N., Poetz, M. K., & Schreier, M. (2013). Integrating problem solvers from analogous markets in new product ideation, Management Science, vol. 60, no.4, pp. 1063-1081. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=99110381&site=eds-live&scope=site [Accessed 15 February 2016]

Fritz, S., McCallum, I., Schill, C., Perger, C., Grillmayer, R., Achard, F. & Obersteiner, M. (2009). Geo-Wiki.org: The use of Crowdsourcing to improve global land cover, Remote Sensing, vol. 1, no. 3, pp. 345-354. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edsdoj&AN=d7212d66acd5d665aad573ba9a152312&site=eds-live&scope=site [Accessed 15 February 2016]

Geo-Wiki (2016). Geo-Wiki - Engaging Citizens in Environmental Monitoring, February 2016, Available Online: http://geo-wiki.org/ [Accessed 12 February 2016].

Goodchild, M. F. & Glennon, J. A. (2010). Crowdsourcing geographic information for disaster response: a research frontier, International Journal of Digital Earth, vol. 3, no.3, pp. 231-241. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=a9h&AN=52998580&site=eds-live&scope=site [Accessed 15 February 2016]

Hilgers, D. & Ihl, C. (2010). Citizensourcing: Applying the concept of open innovation to the public sector, International Journal of Public Participation, vol. 4, no. 1, pp. 67-88. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=poh&AN=48757187&site=eds-live&scope=site [Accessed 15 February 2016]

Hossain, M. & Zahidul Islam, K. M. (2015). Generating ideas on Online Platforms: A Case Study of My Starbucks Idea, Arab Economic and Business Journal, vol. 10, no. 2, pp. 102-111. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edselp&AN=S2214462515000134&site=eds-live&scope=site [Accessed 15 February 2016]

Howe, J. (2006). The rise of Crowdsourcing, Wired, vol. 14, no. 6, pp. 1-4. Link: http://disco.ethz.ch/lectures/fs10/seminar/paper/michael-8.pdf [Accessed 15 February 2016]

Huberman, B. A., Romero, D. M., & Wu, F. (2009). Crowdsourcing, attention and productivity, Journal of Information Science, vol. 35, no. 6, pp. 758-765. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edb&AN=45566177&site=eds-live&scope=site [Accessed 15 February 2016]

Jouret, G. (2009). Inside Cisco’s search for the next big idea, Harvard Business Review, vol. 87, no. 9, pp. 43-45. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=43831018&site=eds-live&scope=site [Accessed 15 February 2016]

Kern, R., Zirpins, C. & Agarwal, S. (2009). Managing quality of human-based eservices, in Service-Oriented Computing–ICSOC 2008 Workshops, Springer Berlin Heidelberg, pp. 304-309. Link: http://link.springer.com.ludwig.lub.lu.se/chapter/10.1007%2F978-3-642-01247-1_31 [Accessed 15 February 2016]

Kittur, A., Chi, E. H., & Suh, B. (2008). Crowdsourcing user studies with Mechanical Turk, in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, pp. 453-456. Link:http://www-users.cs.umn.edu/~echi/papers/2008-CHI2008/2008-02-mech-turk-online-experiments-chi1049-kittur.pdf [Accessed 15 February 2016]

Kristensson, P. & Magnusson, P. R. (2010). Tuning users’ innovativeness during ideation, Creativity and Innovation Management, vol. 19, no. 2, pp. 147-159. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=50329574&site=eds-live&scope=site [Accessed 15 February 2016]

Labrecque, L. I., vor dem Esche, J., Mathwick, C., Novak, T. P. & Hofacker, C. F. (2013). Consumer Power: Evolution in the Digital Age, Journal of Interactive Marketing, vol. 27, no. 4, pp. 257-269. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edselp&AN=S1094996813000376&site=eds-live&scope=site [Accessed 15 February 2016]

Littera Report (2016). Littera Report, February 2016, Available Online: https://www.litterareport.com/ [Accessed 12 February 2016].

Lutz, R. (2011). Marketing Scholarship 2.0, Journal of Marketing, vol. 75, no. 4, pp. 225-234. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=61237665&site=eds-live&scope=site [Accessed 15 February 2016]

Martínez-Torres, M. R. (2012). Application of evolutionary computation techniques for the identification of innovators in open innovation communities, Expert Systems with Applications, vol. 40, no. 7, pp. 2503-2510. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edselp&AN=S0957417412011943&site=eds-live&scope=site [Accessed 15 February 2016]

Mason, W., & Suri, S. (2012). Conducting behavioral research on Amazon’s Mechanical Turk, Behavior Research Methods, vol. 44, no.1, pp. 1-23. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=cmedm&AN=21717266&site=eds-live&scope=site [Accessed 15 February 2016]

Muthukumaraswamy, K. (2010). When the media meet crowds of wisdom: How journalists are tapping into audience expertise and manpower for the processes of newsgathering, Journalism Practice, vol. 4, no. 1, pp. 48-65. Link:http://www.tandfonline.com/doi/abs/10.1080/17512780903068874 [Accessed 15 February 2016]

My Starbucks Idea (2013). My Starbucks Idea – Share. Vote. Discuss. See, February 2016, Available Online: http://mystarbucksidea.force.com/ [Accessed 12 February 2016].

Paolacci, G., Chandler, J. & Ipeirotis, P. (2010). Running experiments on Amazon Mechanical Turk, Judgment and Decision Making, vol. 5, no. 5, pp. 411-419. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edsdoj&AN=fb5c50ab8faf19b7ebd8f8b764241ea9&site=eds-live&scope=site [Accessed 15 February 2016]

Poetz, M. K., & Schreier, M. (2012). The value of Crowdsourcing: can users really compete with professionals in generating new product ideas?, Journal of Product Innovation Management, vol. 29, no. 2, pp. 245-256. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=70471380&site=eds-live&scope=site [Accessed 15 February 2016]

Policing-Act-Wiki (2007). Policing-Act-Wiki - Policing Act wiki gives Kiwis an innovative way to suggest the wording for a new Act of Parliament, February 2016, Available Online: http://www.police.govt.nz/news/release/3370 [Accessed 12 February 2016].

Rohrbeck, R., Steinhoff, F. & Perder, F. (2010). Sourcing innovation from your customer: how multinational enterprises use web platforms for virtual customer integration, Technology Analysis & Strategic Management, vol. 22, no. 2, pp. 117-131. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=49140581&site=eds-live&scope=site [Accessed 15 February 2016]

Rotman, D., Preece, J., Hammock, J., Procita, K., Hansen, D., Parr, C. & Jacobs, D. (2012). Dynamic changes in motivation in collaborative citizen-science projects, in Proceedings of the ACM 2012 conference on Computer Supported Cooperative Work, ACM. Link:http://hcil2.cs.umd.edu/trs/2011-28/2011-28.pdf [Accessed 15 February 2016]

Schulze, A. & Hoegl, M. (2008). Organizational Knowledge creation and the generation of new product ideas: A behavioral approach, Research Policy, vol. 37, no. 10, pp. 1742-1750. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edselp&AN=S0048733308001492&site=eds-live&scope=site [Accessed 15 February 2016]

Schweitzer, F. M., Buchinger, W., Gassmann, O. & Obrist, M. (2012). Crowdsourcing: leveraging innovation through online idea competitions, Research-Technology Management, vol. 55, no.3, pp. 32-38. Link:http://www.tandfonline.com/doi/abs/10.5437/08956308X5503055 [Accessed 15 February 2016]

Scientific American (2016). Scientific American - Help make science happen by volunteering for a real research project, February 2016, Available Online: http://www.scientificamerican.com/citizen-science/ [Accessed 12 February 2016].

Silvertown, J. (2009). A new dawn for citizen science, Trends in Ecology & Evolution, vol. 24, no. 9, pp. 467-471. Link: https://povesham.files.wordpress.com/2014/10/073b5-silvertown20tree20200920citizen20science.pdf [Accessed 15 February 2016]

Stieger, D., Matzler, K., Chatterjee, S. & Ladstaetter-Fussenegger, F. (2012). Democratizing strategy: How Crowdsourcing can be used for strategy dialogues, California Management Review, vol. 54, no. 4, pp. 44-68. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=bth&AN=78584609&site=eds-live&scope=site [Accessed 15 February 2016]

Surowiecki, J. (2005). The Wisdom of Crowds – Why the Many are Smarter than the Few, New York: First Anchor Books Edition. Link:https://gsappworkflow2011.files.wordpress.com/2011/09/wisdom-of-crowds_surowiecki-1.pdf [Accessed 15 February 2016]

Swan, M., Hathaway, K., Hogg, C., McCauley, R. & Vollrath, A. (2010). Citizen science genomics as a model for crowdsourced preventive medicine research, Journal of Participatory Medicine, 2: article e20. Link:http://www.jopm.org/evidence/research/2010/12/23/citizen-science-genomics-as-a-model-for-crowdsourced-preventive-medicine-research/ [Accessed 15 February 2016]

Ubuntu (2016). Ubuntu - an open source software platform that runs from the cloud, to the smartphone, to all your things, February 2016, Available Online: http://www.ubuntu.com/ [Accessed 12 February 2016].

Westerski, A., Dalamagas, T. & Iglesias, C. A. (2013). Classifying and comparing community innovation in idea management systems, Decision Support Systems, vol. 54, no. 3, pp. 1316-1326. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edselp&AN=S0167923612003533&site=eds-live&scope=site [Accessed 15 February 2016]

Wexler, M. N. (2011). Reconfiguring the sociology of the crowd: exploring Crowdsourcing, International Journal of Sociology and Social Policy, vol. 31, no. 1/2, pp. 6-20. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com.ludwig.lub.lu.se/login.aspx?direct=true&db=p4h&AN=61918723&site=eds-live&scope=site [Accessed 15 February 2016]

Wiggins, A., & Crowston, K. (2011). From conservation to Crowdsourcing: A typology of citizen science, in System Sciences (HICSS), 2011 44th Hawaii International Conference, pp. 1-10. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com.ludwig.lub.lu.se/login.aspx?direct=true&db=edb&AN=80360120&site=eds-live&scope=site [Accessed 15 February 2016]

Wiley, J. (1998). Expertise as mental set: The effects of domain knowledge in creative problem solving, Memory & Cognition, vol. 26, no. 4, pp. 716-730. Link:http://ludwig.lub.lu.se/login?url=http://search.ebscohost.com.ludwig.lub.lu.se/login.aspx?direct=true&db=edb&AN=927744&site=eds-live&scope=site [Accessed 15 February 2016]